Saving Cloud Costs with Kubernetes on AWS


How to save cloud costs when running Kubernetes? There is no silver bullet, but this blog post describes a few tools to help you manage resources and reduce cloud spending.

I wrote this post with Kubernetes on AWS in mind, but it applies in nearly the same way to other cloud providers. I assume that your cluster(s) have cluster autoscaling (cluster-autoscaler) configured. Deleting resources and scaling down deployments will only save costs if it also shrinks your fleet of worker nodes (EC2 instances).

This post will cover:

cleaning up unused resources (kube-janitor)

scaling down during non-work hours (kube-downscaler)

using horizontal autoscaling (HPA)

reducing resource slack (kube-resource-report, VPA)

using Spot instances

Clean up unused resources

Working in a fast-paced environment is great. We want tech organizations to accelerate. Faster software delivery also means more PR deployments, preview environments, prototypes, and spike solutions. All deployed on Kubernetes. Who has time to clean up test deployments manually? It's easy to forget about deleting last week's experiment. The cloud bill will eventually grow because of things we forget to shut down.

Kubernetes Janitor (kube-janitor) helps to clean up your cluster. The janitor configuration is flexible for both global and local usage:

Generic cluster-wide rules can dictate a maximum time-to-live (TTL) for PR/test deployments.

Individual resources can be annotated with janitor/ttl, e.g. to automatically delete a spike/prototype after 7 days.

Generic rules are defined in a YAML file. Its path is passed via the --rules-file option to kube-janitor.

Here is an example rule to delete all namespaces with -pr- in their name after two days:

    - id: cleanup-resources-from-pull-requests
      resources:
        - namespaces
      jmespath: "contains(metadata.name, '-pr-')"
      ttl: 2d
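To make the TTL idea concrete, here is a minimal Python sketch of how a TTL string and a resource's creation timestamp could be combined into an "expired" decision. It is not kube-janitor's actual code: the function names are hypothetical and the set of unit suffixes (s, m, h, d, w) is an assumption for illustration.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumed unit suffixes for this sketch: seconds, minutes, hours, days, weeks.
UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400, "w": 604800}


def parse_ttl(ttl: str) -> timedelta:
    """Convert a TTL string such as '2d' or '30m' into a timedelta."""
    match = re.fullmatch(r"(\d+)([smhdw])", ttl)
    if match is None:
        raise ValueError(f"unsupported TTL: {ttl!r}")
    value, unit = match.groups()
    return timedelta(seconds=int(value) * UNIT_SECONDS[unit])


def is_expired(creation_timestamp: str, ttl: str, now: datetime) -> bool:
    """Return True once the resource is older than its TTL."""
    created = datetime.strptime(creation_timestamp, "%Y-%m-%dT%H:%M:%SZ")
    created = created.replace(tzinfo=timezone.utc)
    return now - created > parse_ttl(ttl)


now = datetime(2020, 5, 4, 12, 0, tzinfo=timezone.utc)
print(is_expired("2020-05-01T09:00:00Z", "2d", now))  # True: created over 3 days ago
print(is_expired("2020-05-03T09:00:00Z", "2d", now))  # False: created about 1 day ago
```

A namespace matched by the rule above would be deleted on the first janitor run for which such a check is true.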

To require the application label on the Pods of every Deployment and StatefulSet created from 2020 onwards, while still allowing tests to run without the label for up to a week:

    - id: require-application-label
      # remove deployments and statefulsets without a label "application"
      resources:
        - deployments
        - statefulsets
      # see http://jmespath.org/specification.html
      jmespath: "!(spec.template.metadata.labels.application) && metadata.creationTimestamp > '2020-01-01'"
      ttl: 7d

To run a time-limited demo for 30 minutes in a cluster where kube-janitor is running, annotate the demo's Deployment with janitor/ttl set to 30m.

Another source of growing costs is persistent volumes (AWS EBS). Deleting a Kubernetes StatefulSet will not delete its persistent volume claims (PVCs). Unused EBS volumes can easily cost hundreds of dollars per month. Kubernetes Janitor has a feature to clean up unused PVCs. For example, this rule will delete all PVCs which are not mounted by a Pod and not referenced by a StatefulSet or CronJob:

    # delete all PVCs which are not mounted and not referenced by StatefulSets
    - id: remove-unused-pvcs
      resources:
        - persistentvolumeclaims
      jmespath: "_context.pvc_is_not_mounted && _context.pvc_is_not_referenced"
      ttl: 24h

Kubernetes Janitor can help you keep your cluster "clean" and prevent slowly growing cloud costs. See the kube-janitor README for instructions on how to deploy and configure.

Scale down during non-working hours

Test and staging systems are usually only required to run during working hours. Some production applications, such as back office/admin tools, also require only limited availability and can be shut down during the night.

Kubernetes Downscaler (kube-downscaler) allows users and operators to scale down systems during off-hours. Deployments and StatefulSets can be scaled to zero replicas. CronJobs can be suspended. Kubernetes Downscaler is configured for the whole cluster, one or more namespaces, or individual resources. Either "downtime" or its inverse, "uptime", can be set. For example, to scale down everything during the night and the weekend:

    image: hjacobs/kube-downscaler:20.4.3
    args:
      - --interval=30
      # do not scale down infrastructure components
      - --exclude-namespaces=kube-system,infra
      # do not downscale ourselves, also keep Postgres Operator so excluded DBs can be managed
      - --exclude-deployments=kube-downscaler,postgres-operator
      - --default-uptime=Mon-Fri 08:00-20:00 Europe/Berlin
      - --include-resources=deployments,statefulsets,stacks,cronjobs
      - --deployment-time-annotation=deployment-time
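The following Python sketch illustrates how an uptime spec like the one above could be evaluated against the current time. It is a simplified illustration, not kube-downscaler's implementation: the function name is hypothetical, only a single "Day-Day HH:MM-HH:MM Timezone" window is handled, and day ranges that wrap around the week (e.g. Sat-Mon) are not supported.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional
from zoneinfo import ZoneInfo  # Python 3.9+

WEEKDAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def is_uptime(now: datetime, spec: str, tz: Optional[tzinfo] = None) -> bool:
    """Check whether the aware datetime `now` falls inside `spec`.

    `tz` overrides the time zone named in the spec (useful for testing).
    """
    days, hours, tz_name = spec.split()
    first_day, last_day = (WEEKDAYS.index(day) for day in days.split("-"))
    start, end = hours.split("-")
    local = now.astimezone(tz or ZoneInfo(tz_name))
    in_days = first_day <= local.weekday() <= last_day
    # Zero-padded HH:MM strings compare correctly as plain strings.
    in_hours = start <= local.strftime("%H:%M") < end
    return in_days and in_hours


spec = "Mon-Fri 08:00-20:00 Europe/Berlin"
berlin_summer = timezone(timedelta(hours=2))  # fixed-offset stand-in for CEST
friday_evening = datetime(2020, 5, 8, 17, 30, tzinfo=timezone.utc)  # 19:30 in Berlin
saturday_noon = datetime(2020, 5, 9, 10, 0, tzinfo=timezone.utc)
print(is_uptime(friday_evening, spec, tz=berlin_summer))  # True
print(is_uptime(saturday_noon, spec, tz=berlin_summer))   # False
```

Whenever such a check is false, the downscaler would scale the included resources down to zero replicas, and back up once it becomes true again.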

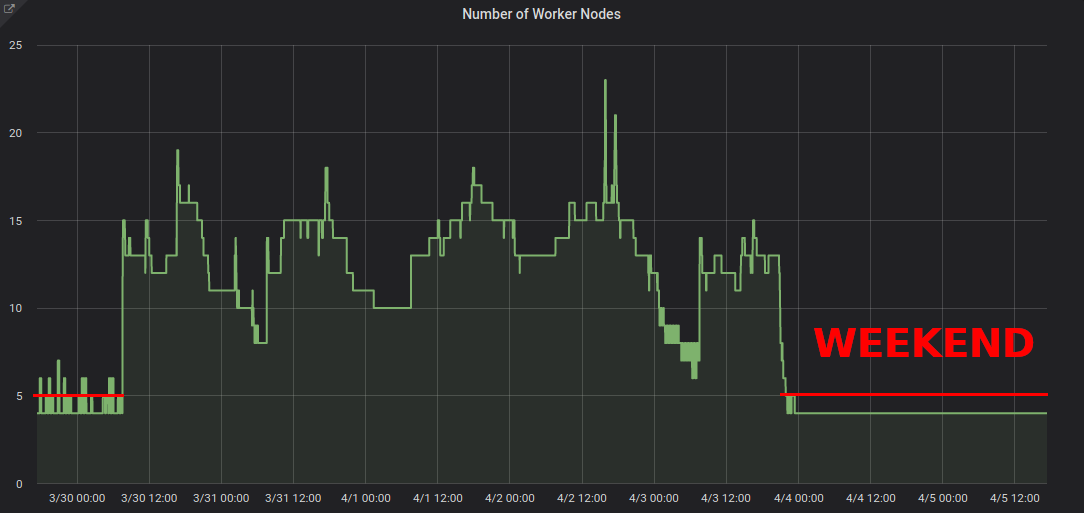

Here a graph of cluster worker nodes scaling down on the weekend:

Scaling down from ~13 to 4 worker nodes certainly makes a difference on the AWS bill.

What if I need to work during the cluster's "downtime"? Specific deployments can be permanently excluded from downscaling by adding the annotation downscaler/exclude: true.

Deployments can be temporarily excluded by using the downscaler/exclude-until annotation with an absolute timestamp in the format YYYY-MM-DD HH:MM (UTC).
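As a small convenience, the annotation value can be computed instead of typed by hand. This Python sketch formats "now plus N hours" in the YYYY-MM-DD HH:MM (UTC) format described above; the helper name is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def exclude_until_value(now: datetime, hours: int) -> str:
    """Format the UTC timestamp `hours` after `now` as 'YYYY-MM-DD HH:MM'."""
    until = now.astimezone(timezone.utc) + timedelta(hours=hours)
    return until.strftime("%Y-%m-%d %H:%M")


now = datetime(2020, 5, 8, 21, 30, tzinfo=timezone.utc)
print(exclude_until_value(now, hours=4))  # 2020-05-09 01:30
```

The printed value can then be used as the value of the downscaler/exclude-until annotation.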

If needed, the whole cluster can be scaled back up by deploying a Pod with a downscaler/force-uptime annotation, e.g. by running a dummy nginx deployment:

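    # kubectl 1.18+: "kubectl run" only creates a Pod, so create a Deployment explicitly
    kubectl create deployment scale-up --image=nginx
    kubectl annotate deploy scale-up janitor/ttl=1h # delete the deployment after one hour
    # Pods created by "kubectl create deployment" carry the label app=<name>
    kubectl annotate pod $(kubectl get pod -l app=scale-up -o jsonpath="{.items[0].metadata.name}") downscaler/force-uptime=true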

Check the kube-downscaler README for deployment instructions and additional options.

Use horizontal autoscaling

Many applications/services deal with a dynamic load pattern: sometimes their Pods idle and sometimes they are at capacity. Always running a fleet of Pods sized for the maximum peak load is not cost-efficient. Kubernetes supports horizontal autoscaling via the HorizontalPodAutoscaler (HPA) resource. CPU usage is often a good metric to scale on:

    # on Kubernetes 1.23+ use autoscaling/v2 (v2beta2 was removed in 1.26)
    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      name: my-app
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: my-app
      minReplicas: 3
      maxReplicas: 10
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              averageUtilization: 100
              type: Utilization
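The core of the HPA scaling decision is the documented formula desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below applies it to the my-app example (target 100% CPU utilization of the requests, 3 to 10 replicas). It is a simplification: the real controller also applies a tolerance and stabilization behavior, which are not modeled here, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int,
                     max_replicas: int) -> int:
    """Apply the HPA formula and clamp the result to [min_replicas, max_replicas]."""
    desired = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, 150, 100, 3, 10))  # 3 * 150 / 100 = 4.5 -> 5
print(desired_replicas(5, 40, 100, 3, 10))   # 5 * 40 / 100 = 2.0 -> clamped to 3
print(desired_replicas(8, 200, 100, 3, 10))  # 8 * 200 / 100 = 16 -> clamped to 10
```

The second call shows why minReplicas matters for cost: it sets the floor the cluster never scales below, no matter how idle the Pods are.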

Zalando created a component to easily plug in custom metrics to scale on:

Kube Metrics Adapter (kube-metrics-adapter) is a general purpose metrics adapter for Kubernetes that can collect and serve custom and external metrics for Horizontal Pod Autoscaling.

It supports scaling based on Prometheus metrics, SQS queues, and others out of the box.

For example, to scale a deployment on a custom metric exposed by the application itself as JSON on /metrics:

    # on Kubernetes 1.23+ use autoscaling/v2 (v2beta2 was removed in 1.26)
    apiVersion: autoscaling/v2beta2
    kind: HorizontalPodAutoscaler
    metadata:
      name: myapp-hpa
      annotations:
        # metric-config.<metricType>.<metricName>.<collectorName>/<configKey>
        metric-config.pods.requests-per-second.json-path/json-key: "$.http_server.rps"
        metric-config.pods.requests-per-second.json-path/path: /metrics
        metric-config.pods.requests-per-second.json-path/port: "9090"
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: myapp
      minReplicas: 1
      maxReplicas: 10
      metrics:
        - type: Pods
          pods:
            metric:
              name: requests-per-second
            target:
              averageValue: 1k
              type: AverageValue

Configuring horizontal autoscaling with HPA should be one of the default actions to increase efficiency for stateless services. Spotify has a presentation with their learnings and recommendations for HPA: Scale Your Deployments, Not Your Wallet.

Reduce resource slack

Kubernetes workloads specify their CPU/memory needs via "resource requests".

CPU resources are measured in virtual cores or more commonly in "millicores", e.g. 500m denoting 50% of a vCPU.

Memory resources are measured in bytes and the usual suffixes can be used, e.g. 500Mi denoting 500 mebibytes.

Resource requests "block" capacity on worker nodes, i.e. a Pod with 1000m CPU requests on a node with 4 vCPUs will leave only 3 vCPUs available for other Pods. [1]
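To illustrate the units, here is a minimal Python sketch that converts CPU and memory quantities into plain numbers and reproduces the 4 vCPU example. It only handles millicores and the binary suffixes (Ki, Mi, Gi, Ti); the full Kubernetes quantity syntax has more forms, and the function names are hypothetical.

```python
from decimal import Decimal

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_cpu(quantity: str) -> Decimal:
    """Convert a CPU quantity ('500m' or '2') into vCPUs."""
    if quantity.endswith("m"):
        return Decimal(quantity[:-1]) / 1000
    return Decimal(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity ('500Mi', '2Gi' or plain bytes) into bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-2]) * factor)
    return int(quantity)


node_cpu = Decimal(4)  # a node with 4 vCPUs
pod_requests = ["1000m"]
remaining = node_cpu - sum(parse_cpu(q) for q in pod_requests)
print(remaining)              # 3 vCPUs left for other Pods
print(parse_cpu("500m"))      # 0.5
print(parse_memory("500Mi"))  # 524288000
```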

Slack is the difference between the requested resources and the real usage. For example, a Pod which requests 2 GiB of memory, but only uses 200 MiB, has ~1.8 GiB of memory "slack". Slack costs money. We can roughly say that 1 GiB of memory slack costs ~$10/month. [2]
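The arithmetic behind this example, as a short Python sketch. The $10 per GiB per month figure is the rough estimate from the text, not an exact AWS price, and the function name is hypothetical.

```python
MIB = 2**20
GIB = 2**30
COST_PER_GIB_MONTH = 10.0  # rough estimate from the text, in USD


def memory_slack_gib(requested_bytes: int, used_bytes: int) -> float:
    """Slack is the requested memory minus the real usage, never negative."""
    return max(requested_bytes - used_bytes, 0) / GIB


slack = memory_slack_gib(2 * GIB, 200 * MIB)
print(f"slack: {slack:.2f} GiB, cost: ~${slack * COST_PER_GIB_MONTH:.0f}/month")
# slack: 1.80 GiB, cost: ~$18/month
```

Multiplied by the number of replicas and applications in a cluster, such small per-Pod amounts add up quickly.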

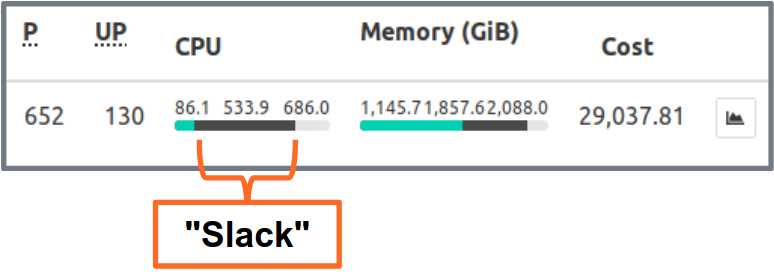

Kubernetes Resource Report (kube-resource-report) displays slack and can help you identify saving potential:

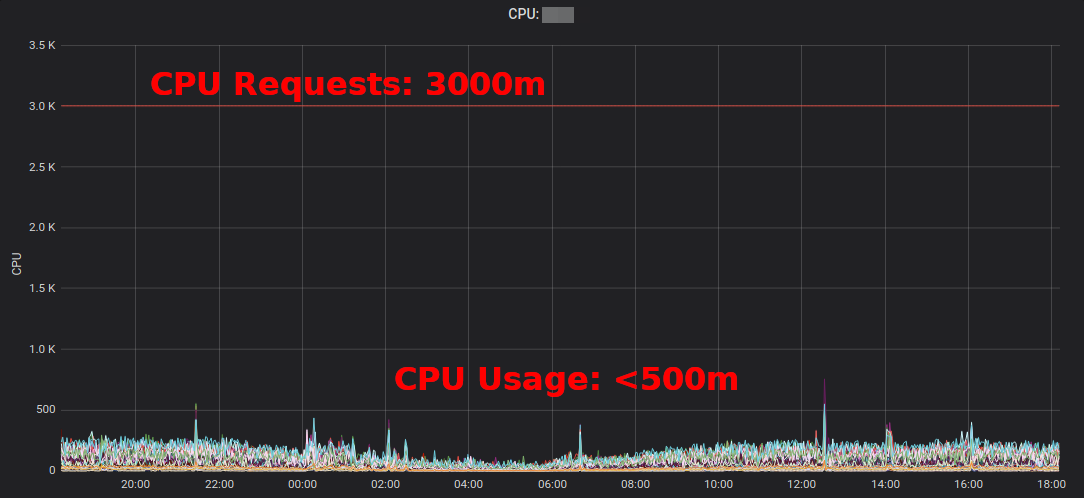

Kubernetes Resource Report shows slack aggregated by application and team. This allows finding opportunities where resource requests can be lowered. The generated HTML report only provides a snapshot of resource usage. You need to look at CPU/memory usage over time to set the right resource requests. Here is a Grafana chart of a "typical" service with a lot of CPU slack: all Pods stay well below the 3 requested CPU cores.

Reducing the CPU requests from 3000m to ~400m frees up resources for other workloads and allows the cluster to scale down.

"The average CPU utilization of EC2 instances often hovers in the range of single-digit percentages" writes Corey Quinn. While EC2 Right Sizing might be wrong, changing some Kubernetes resource requests in a YAML file is easy to do and can yield huge savings.

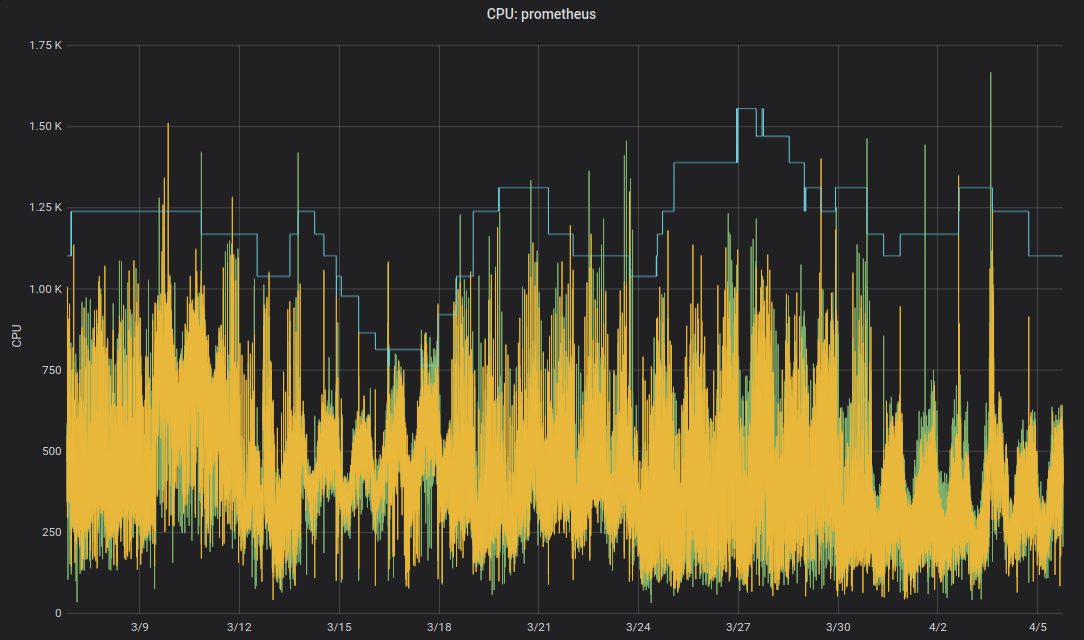

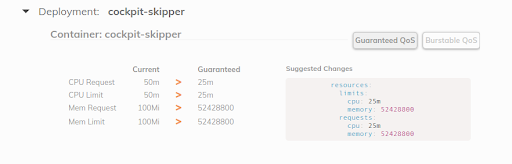

But do we really want humans to change numbers in YAML files? No, machines can do this better! The Kubernetes Vertical Pod Autoscaler (VPA) does exactly this: it adapts resource requests and limits to match the workload. Here is an example graph of Prometheus' CPU requests (thin blue line) adapted by VPA over time:

Zalando uses the VPA in all its clusters for infrastructure components. Non-critical applications can also use VPA.

Fairwinds' Goldilocks is a tool that creates a VPA for each deployment in a namespace and then shows the VPA recommendations on its dashboard. This can help developers set the right CPU/memory requests for their application(s).

I wrote a short blog post on VPA in 2019 and a recent CNCF End User Community call discussed VPA.

Use EC2 Spot instances

Last, but not least, AWS EC2 costs can be reduced by using Spot instances as Kubernetes worker nodes [3]. Spot Instances are available at up to a 90% discount compared to On-Demand prices. Kubernetes and EC2 Spot are a good combination: specifying multiple instance types improves availability, and if you get a bigger node for the same or a lower price, Kubernetes' containerized workloads can make use of the extra capacity.

How to run Kubernetes on EC2 Spot? There are multiple possibilities: using a third-party service like SpotInst (now called "Spot", don't ask me why) or simply adding a Spot AutoScalingGroup (ASG) to your cluster. For example, here is a CloudFormation snippet for a "capacity-optimized" Spot ASG with multiple instance types:

    MySpotAutoScalingGroup:
      Properties:
        HealthCheckGracePeriod: 300
        HealthCheckType: EC2
        MixedInstancesPolicy:
          InstancesDistribution:
            OnDemandPercentageAboveBaseCapacity: 0
            SpotAllocationStrategy: capacity-optimized
          LaunchTemplate:
            LaunchTemplateSpecification:
              LaunchTemplateId: !Ref LaunchTemplate
              Version: !GetAtt LaunchTemplate.LatestVersionNumber
            Overrides:
              - InstanceType: "m4.2xlarge"
              - InstanceType: "m4.4xlarge"
              - InstanceType: "m5.2xlarge"
              - InstanceType: "m5.4xlarge"
              - InstanceType: "r4.2xlarge"
              - InstanceType: "r4.4xlarge"
        LaunchTemplate:
          LaunchTemplateId: !Ref LaunchTemplate
          Version: !GetAtt LaunchTemplate.LatestVersionNumber
        MinSize: 0
        MaxSize: 100
        Tags:
          - Key: k8s.io/cluster-autoscaler/node-template/label/aws.amazon.com/spot
            PropagateAtLaunch: true
            Value: "true"

Some remarks on using Spot with Kubernetes:

You need to handle Spot terminations, e.g. by draining the node on instance shutdown

Zalando uses a fork of the official cluster autoscaler with node pool priorities

Spot nodes can be tainted to allow workloads to "opt-in" to run on Spot
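For the first remark, here is a minimal Python sketch of one building block of a termination handler: interpreting the Spot interruption notice. EC2 exposes the notice as a small JSON document with "action" and "time" fields at the instance metadata path /latest/meta-data/spot/instance-action once an interruption is scheduled. Polling that endpoint and actually cordoning and draining the node are not shown, and the function name is hypothetical.

```python
import json
from datetime import datetime, timezone


def seconds_until_interruption(instance_action_json: str, now: datetime) -> float:
    """Parse a Spot instance-action document and return the seconds left."""
    doc = json.loads(instance_action_json)
    when = datetime.strptime(doc["time"], "%Y-%m-%dT%H:%M:%SZ")
    when = when.replace(tzinfo=timezone.utc)
    return (when - now).total_seconds()


# Example document in the shape served by the instance metadata service.
notice = '{"action": "terminate", "time": "2020-05-08T12:02:00Z"}'
now = datetime(2020, 5, 8, 12, 0, tzinfo=timezone.utc)
remaining = seconds_until_interruption(notice, now)
print(f"{remaining:.0f}s left: cordon and drain the node now")
```

Because the warning window is short, a handler would typically start draining as soon as the notice appears rather than waiting for the deadline.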

Summary

I hope you find some of the presented tools useful to reduce your cloud bill. Most of this post's content is also available in my DevOps Gathering 2019 talk on YouTube and as slides.

What are your good practices for cloud cost savings and Kubernetes? Please tell me and reach out on Twitter (@try_except_).